First Experiments + Basic Mask Reveal

Category: Experimentation, TouchDesigner

1. Introduction

TouchDesigner is proving to be a fascinating tool for exploring interactive projections. After diving into some foundational tutorials and documentation, I’ve started experimenting with basic motion tracking and masking to understand its potential and limitations.

This first experiment focuses on using a static mask to reveal a hidden image, laying the groundwork for future body-tracking-based interaction.

2. Installing & Understanding TouchDesigner

Since I had never worked with TouchDesigner before, my first step, before jumping into the masking experiment, was to get familiar with the interface and core concepts. I explored:

- Node-based programming and how it differs from traditional coding.

- Basic rendering techniques for real-time visuals.

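- Core operator families used for interactivity, such as CHOPs (Channel Operators), which handle channel data like motion and control signals, and TOPs (Texture Operators), which process images.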

Upon opening the software, I was presented with a default project showcasing basic image manipulation using TOPs.

After playing around with the default setup, I quickly realized that I needed structured guidance to navigate the program effectively.

3. Resources & Tutorials

To get started, I followed several YouTube tutorials and documentation guides, which helped me understand core concepts like TOPs, CHOPs, and DATs (Data Operators).

A crash course:

Basic tutorials with insights into how some nodes work:

Textual learning:

Additionally, I explored the Derivative documentation, particularly the learning tips section, which provided a roadmap for progressing beyond basic interactions:

Learning Tips

I also went through specific courses to strengthen my understanding of TouchDesigner’s fundamentals:

The 100 Series: TouchDesigner Fundamentals

By the end of this research phase, I felt confident enough to set up my first experiment: a simple static mask reveal.

4. Defining My Goals

At this stage, my long-term vision for the project is to build an interactive 3D environment where users can:

- Reveal hidden parts of a scene using their body.

- Trigger interactions by moving their hands over specific areas.

To break this down into achievable steps, I set my first milestone:

Create a basic interaction where a person’s silhouette reveals a hidden image.

- The first experiment will use two static images:

  - One background image (always visible).

  - One hidden image (only revealed when a mask is applied).

- A static mask input will be used to simulate body tracking.

- The goal is to get a functional masking system before integrating real-time motion tracking.

5. Building My First Masking Scene

I set up a basic TouchDesigner project where:

- A static silhouette mask is used to reveal a hidden image on top of a visible background.

- The mask simulates what would eventually be a live body-tracking mask.

Steps to Build the Masking System

- Set up three image inputs:

  - Base image (default background).

  - Hidden image (revealed when the mask is applied).

  - Static mask image (temporary stand-in for body tracking).

- Use a Composite TOP to combine the mask and hidden image:

  - Apply an ATOP operation to cut out the mask shape from the hidden image.

- Overlay the cutout hidden image on top of the base image using another Composite TOP:

  - This time, use an OVER operation to layer the two images correctly.
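The ATOP and OVER steps in these Composite TOPs correspond to the standard Porter-Duff compositing operators. As a renderer-independent sketch (plain Python, not TouchDesigner code and not taken from the project file), the snippet below applies ATOP and then OVER per pixel. It assumes premultiplied RGBA floats in [0, 1] and that the silhouette is stored in the mask's alpha channel; the helper names `atop`, `over` and `composite` are hypothetical. Which Composite TOP input plays the role of A depends on how the inputs are wired, so the sketch does not claim TouchDesigner's exact input ordering.

```python
from typing import Callable, List, Tuple

# Assumption: pixels are premultiplied RGBA floats in [0, 1].
Pixel = Tuple[float, float, float, float]
Image = List[List[Pixel]]


def atop(a: Pixel, b: Pixel) -> Pixel:
    """A ATOP B: A is kept only where B is opaque; B shows where A is transparent."""
    ar, ag, ab, aa = a
    br, bg, bb, ba = b
    return (ar * ba + br * (1 - aa),
            ag * ba + bg * (1 - aa),
            ab * ba + bb * (1 - aa),
            ba)  # the result takes B's alpha


def over(a: Pixel, b: Pixel) -> Pixel:
    """A OVER B: A is layered on top of B."""
    ar, ag, ab, aa = a
    br, bg, bb, ba = b
    return (ar + br * (1 - aa),
            ag + bg * (1 - aa),
            ab + bb * (1 - aa),
            aa + ba * (1 - aa))


def composite(op: Callable[[Pixel, Pixel], Pixel], img_a: Image, img_b: Image) -> Image:
    """Apply a per-pixel operator to two equal-sized images (lists of rows)."""
    return [[op(pa, pb) for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(img_a, img_b)]


# Hypothetical 1x2 test images: left pixel inside the silhouette, right pixel outside.
base = [[(0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 1.0, 1.0)]]    # opaque blue background
hidden = [[(1.0, 0.0, 0.0, 1.0), (1.0, 0.0, 0.0, 1.0)]]  # opaque red hidden image
mask = [[(1.0, 1.0, 1.0, 1.0), (0.0, 0.0, 0.0, 0.0)]]    # white silhouette, transparent elsewhere

cutout = composite(atop, hidden, mask)  # hidden image clipped to the silhouette
result = composite(over, cutout, base)  # cutout layered over the background
print(result)  # → [[(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0)]]
```

Because the hidden image is fully opaque, `hidden ATOP mask` reduces to the hidden color scaled by the mask's alpha, which is exactly a cutout. OVER then shows the background wherever that cutout is transparent.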

Results

-

The static mask successfully revealed the hidden image, confirming that the masking effect works as expected.

-

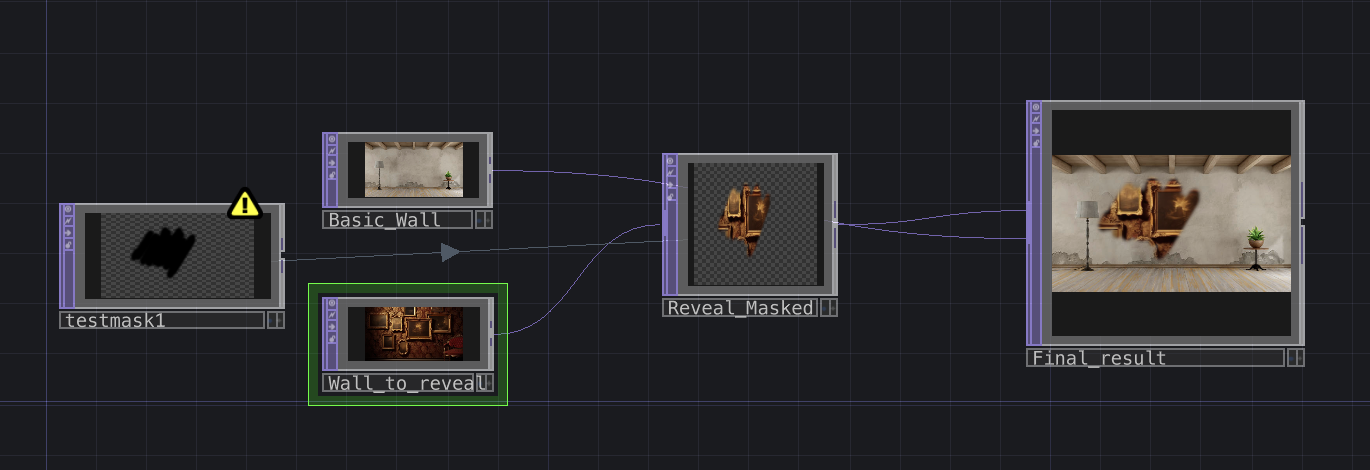

NODE SETUP OVERVIEW

-

END RESULT

Download the project file

6. Next Steps

Now that the basic masking system works, the next challenge is to:

- Replace the static mask with a dynamic body-tracking mask.

- Experiment with different tracking methods (Body Track CHOP, Kinect, AI-based tracking).

- Improve mask responsiveness for a smoother interaction experience.

This experiment was a good first step, confirming that layered image masking is a workable approach for revealing hidden content. Moving forward, the focus will be on making the system react in real time to a user's movements.